Generative AI often feels like magic until you try to use it in real production workflows. It quickly becomes clear that it may be sufficient for creative tasks and entertainment, but it often fails when it comes to precision, taxonomies, and above all, repeatability. Non-reproducible results, inconsistent terminology for identical concepts, hallucinations, and contradictions frequently made classic generative image models unreliable in the past.

This is precisely why structured data is experiencing a major renaissance. Notably, even companies like Black Forest Labs—famous for groundbreaking generative AI innovation—demonstrate how essential formal structure is for achieving reliable results.

The start-up, headquartered like us in Freiburg, is rightly seen as a true thought leader in generative AI. It was recently ranked among Germany’s most promising start-ups, even though our Freiburg “neighbors” are three years younger than we are.

The Trend: Structured Prompts become the industry standard, as Black Forest Labs shows

The latest FLUX.2 release by Black Forest Labs showcases just how rapidly generative AI is evolving in the creative domain. The model is clearly designed for real production and studio use cases:

- consistent characters and styles across multiple reference images

- precise execution of complex prompts

- realistic lighting, materials, and layouts

- reliably readable typography—a long-standing weakness of earlier models

This is made possible through the combination of a latent-flow architecture with a Mistral VLM as the control layer. And this VLM layer is crucial:

Without clear structure within the input, even a cutting-edge model like FLUX.2 cannot deliver the desired precision and reproducibility.

FLUX.2 demonstrates just how important structured prompts have become: only structure transforms generative AI from a creative machine into a reliable tool.

Even its direct competitor, the open-source model Z‑Image from China, is built on the same principle of structured input architectures.

Competitors have already paved the way

Google & Alibaba: Google’s models, including Nano Banana (Gemini 2.5 Flash Image), effectively use JSON prompts to generate hyper-realistic images. In addition, the Veo 3 video generation model accepts complex JSON instructions. Alibaba’s Qwen-Image stands out for its strong prompt conformity, making JSON ideal for product images that require strict consistency.

OpenAI: Since GPT‑3 in 2020, and increasingly with GPT‑4 Turbo, OpenAI has built better JSON handling directly into its models. JSON prompting is therefore expected to become the standard for reliable automation.
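To make the idea of a JSON prompt concrete, here is a minimal Python sketch. The field names (`subject`, `lighting`, `style`, `text_overlay`) and the helper `build_image_prompt` are illustrative assumptions, not a schema published by any of the vendors named above; each model documents its own expectations.

```python
import json


def build_image_prompt(subject: str, lighting: str, style: str, overlay_text: str) -> str:
    """Serialize a structured image prompt as deterministic JSON.

    All keys are hypothetical and chosen for illustration only.
    """
    prompt = {
        "subject": subject,
        "lighting": lighting,
        "style": style,
        "text_overlay": {"content": overlay_text, "position": "bottom-center"},
    }
    # sort_keys gives a stable key order, so identical inputs always
    # produce byte-identical prompt strings.
    return json.dumps(prompt, sort_keys=True, ensure_ascii=False)


first = build_image_prompt(
    "ceramic coffee mug", "soft studio light", "product photo", "Freiburg Roast"
)
second = build_image_prompt(
    "ceramic coffee mug", "soft studio light", "product photo", "Freiburg Roast"
)

print(first)
print(first == second)
```

A stable prompt string is only one ingredient of reproducibility. Whether the generated image is identical as well depends on model-side settings such as seeds, which this sketch does not cover.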

Why structure remains essential — also for analytical AI

Generative models are developed using huge amounts of structured training data. But in practical use, many users suddenly expect the models to meet all requirements without any structure—as if they were pure oracles.

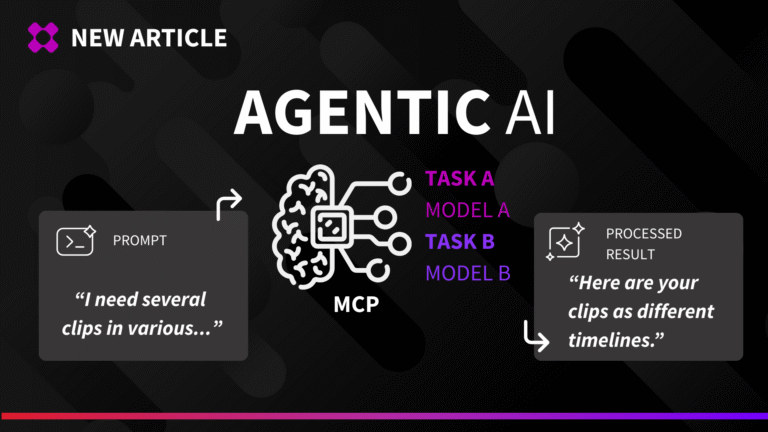

However, the more complex the tasks become, the clearer it is that GenAI needs structured inputs and structured outputs in order to function reliably. DeepVA is built on the same foundation.

DeepVA Visual Understanding: Structured Metadata for real-world workflows

For AICONIX customers and partners, this commitment to structuring is implemented through the Visual Understanding Module (DeepVA), which offers a competitive advantage in media workflows.

Aiconix provides structured visual understanding by combining the flexibility of prompt-based analysis with the reliability of predefined JSON schemas for output. This approach was developed for professional media operations:

- Workflow automation: Structured visual data provides the necessary contextual awareness of scenes, people, and content to trigger intelligent editing decisions and downstream processes. Structured results with frame-accurate timecodes can be used to automatically generate edit decision lists (EDLs) for tasks such as cutting highlights in sports broadcasts.

- Consistency and compliance: The use of predefined JSON schemas guarantees a uniform tagging convention for all media types. This supports important functions for automating capture, such as content classification, logo recognition for compliance purposes, and emotion analysis.

- Composite AI foundation: The structured visual data serves as a building block for composite AI workflows, allowing Aiconix to seamlessly combine visual cues with other capabilities such as speech recognition and large language models to obtain richer, fully indexed media resources.

- Sovereignty: The ability to use VLM functions and prompt-based queries in a secure environment ensures that valuable data remains within the company.
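The combination of free-form prompts with a predefined output schema can be illustrated with a small Python sketch. It is not the DeepVA API: the field names, the category vocabulary, and the function `validate_tag` are assumptions made up for this example. The point is only that a response is accepted when it matches the agreed structure and rejected otherwise.

```python
import json

# Hypothetical controlled vocabulary and required fields; a real taxonomy
# would come from the customer's own metadata model.
ALLOWED_CATEGORIES = {"sports", "news", "interview", "advertisement"}
REQUIRED_FIELDS = {
    "category": str,
    "logos": list,
    "timecode_in": str,
    "timecode_out": str,
}


def validate_tag(raw: str) -> dict:
    """Parse one model response and reject anything outside the schema."""
    record = json.loads(raw)
    if not isinstance(record, dict):
        raise ValueError("response must be a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            raise ValueError(f"missing field: {field}")
        if not isinstance(record[field], expected_type):
            raise ValueError(f"wrong type for field: {field}")
    unexpected = set(record) - set(REQUIRED_FIELDS)
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")
    if record["category"] not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {record['category']}")
    return record


good = (
    '{"category": "sports", "logos": ["ExampleBrand"], '
    '"timecode_in": "00:01:10:05", "timecode_out": "00:01:14:00"}'
)
bad = (
    '{"category": "Sport", "logos": [], '
    '"timecode_in": "00:01:10:05", "timecode_out": "00:01:14:00"}'
)

print(validate_tag(good)["category"])
try:
    validate_tag(bad)
except ValueError as err:
    print(err)
```

Rejecting "Sport" because only "sports" is in the vocabulary is exactly the kind of strictness that keeps tags uniform across an archive. In practice a schema language such as JSON Schema would likely replace the hand-written checks.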

Structured Prompting: a win for your workflows

- Automated and consistent tagging according to your taxonomy: Supports content classification, logo recognition (compliance), and emotion analysis.

- Consistent, searchable metadata: Perfect for MAM/DAM, archives, or recommendation engines.

- Faster content pipelines: Reliable data speeds up analysis, packaging, and redistribution.

- Better training data for AI systems: Structured visual metadata is essential for robust models.

- Intelligent editing decisions to trigger downstream processes

- Automatic creation of Edit Decision Lists

- Seamless combination with other functions
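How frame-accurate, structured results can turn into an edit decision list is sketched below in Python. This is a simplified, CMX3600-style layout under stated assumptions: a fixed frame rate of 25 fps without drop-frame handling, a placeholder reel name `AX`, and hypothetical input fields `label`, `in_frame`, and `out_frame`. A production EDL would need more care than this.

```python
FPS = 25  # assumed frame rate; non-drop-frame only


def frames_to_timecode(frames: int, fps: int = FPS) -> str:
    """Convert an absolute frame count to HH:MM:SS:FF."""
    seconds, ff = divmod(frames, fps)
    minutes, ss = divmod(seconds, 60)
    hh, mm = divmod(minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def build_edl(title: str, highlights: list[dict], fps: int = FPS) -> str:
    """Lay the detected highlights back to back on the record timeline."""
    lines = [f"TITLE: {title}", "FCM: NON-DROP FRAME", ""]
    record_in = 0
    for number, clip in enumerate(highlights, start=1):
        duration = clip["out_frame"] - clip["in_frame"]
        record_out = record_in + duration
        lines.append(
            f"{number:03d}  AX       V     C        "
            f"{frames_to_timecode(clip['in_frame'], fps)} "
            f"{frames_to_timecode(clip['out_frame'], fps)} "
            f"{frames_to_timecode(record_in, fps)} "
            f"{frames_to_timecode(record_out, fps)}"
        )
        # Carry the structured label along as a comment line.
        lines.append(f"* COMMENT: {clip['label']}")
        record_in = record_out
    return "\n".join(lines)


# Hypothetical structured detections, e.g. from a sports broadcast.
highlights = [
    {"label": "goal", "in_frame": 1500, "out_frame": 1750},
    {"label": "penalty", "in_frame": 4000, "out_frame": 4125},
]

edl = build_edl("HIGHLIGHTS", highlights)
print(edl)
```

Because the input is already structured, the conversion is plain arithmetic. No interpretation of free text is needed, which is what makes this step easy to automate.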

Structure creates the magic — not the other way around

The developments at Black Forest Labs make one thing clear:

Unstructured creativity is impressive. But structured intelligence is what creates real value. Generative AI shines when given the right framework. In media, this framework is structured input—enabling entire smart generative workflows to emerge.

Vidispine showcased this with us at IBC 2025:

Using our structured metadata from automated ingest and an intelligent workflow engine, an LLM can generate a rough cut aligned to the speaker’s script—without manual review or tedious assembly.

Thanks to structured metadata and smart reasoning, creative teams can focus on creative tasks while repetitive processes become automated.

This is exactly the approach we have pursued successfully with Visual Understanding for a year now, and one that we are integrating into our many partners' workflows together with them.

If you would like to learn how structured metadata and visual understanding can transform your own workflows, please feel free to contact us — we look forward to hearing from you.